Communicating User Interface Issues: Why CCs and Ds Really Matter

Sep 16, 2025


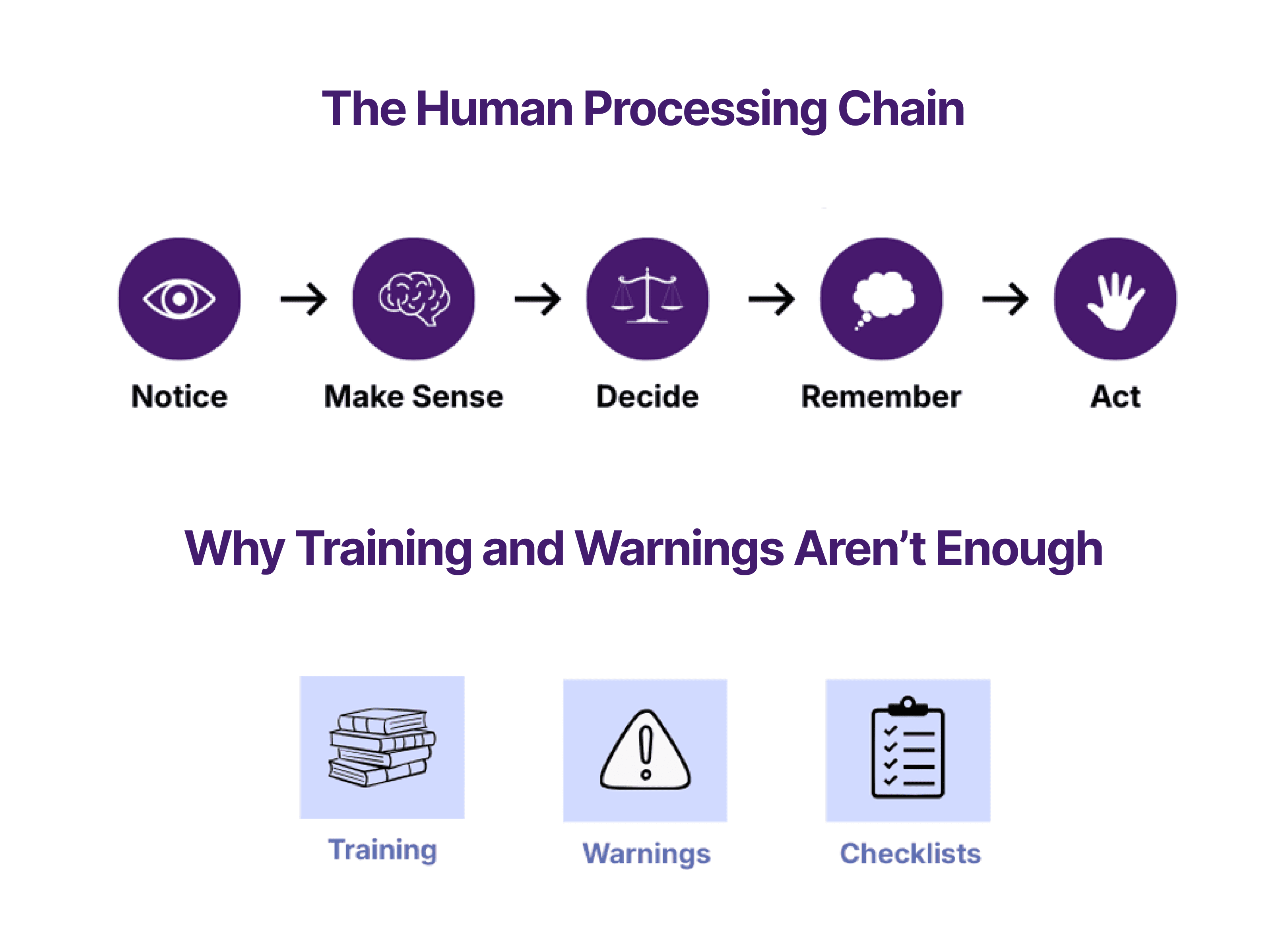

In the medical device industry, Human Factors evaluations are critical for ensuring patient safety and device effectiveness. However, there's a tendency to focus exclusively on use errors while dismissing two equally important categories: close calls (CCs) and use difficulties (Ds). This narrow focus can lead to significant blind spots in device design and potentially compromise patient outcomes.

Understanding the Full Spectrum of User Interface Issues

Use errors represent the tip of the iceberg: instances where users fail to complete tasks or perform them incorrectly, potentially leading to harm. While these are undoubtedly critical, they are only the most severe indicators of underlying interface problems. CCs and Ds provide crucial insights into the user experience that can help prevent future errors and improve the overall usability of the device.

Close calls (CCs) occur when users nearly make an error but catch themselves or are prevented by system safeguards. These events reveal vulnerable points in the interface where users are prone to mistakes, even if no error ultimately occurs. Use difficulties (Ds) represent struggles or inefficiencies in task completion that do not result in errors but indicate suboptimal design that could lead to user frustration, delayed treatment, or increased cognitive load.

Both CCs and Ds indicate that a use error could occur during a future attempt at the task. During simulated-use testing, participants are often more careful and attentive than they would be in real-world use, where this may not always be the case. Therefore, any indication of a use-related issue should be fully considered.

The Hidden Dangers of Ignoring CCs and Ds

Below, we explore theoretical examples of CCs and Ds that could occur in studies, and the potential consequences of ignoring them.

Seemingly minor issues can cascade into major problems. In blood glucose monitoring systems, users might consistently squint or adjust the lighting when reading results, indicating display visibility problems. While they ultimately obtain correct readings, this use difficulty suggests the display may not perform adequately under common environmental conditions. For patients with diabetes-related vision complications, these difficulties could lead to misread results and inappropriate insulin dosing.

Digital thermometers offer another illustration: healthcare workers might repeatedly check and re-check readings because the display seems ambiguous or the confirmation feedback is unclear. Though the final temperature recordings are accurate, this behaviour pattern signals user uncertainty about the validity of results. In busy clinical environments, this lack of confidence could lead to repeated measurements, workflow delays or, worse, the acceptance of incorrect readings once users stop double-checking under time pressure.

Similarly, if healthcare providers consistently struggle to navigate through multiple screens to access critical patient information on a monitoring device, this difficulty might not register as a "failure" in traditional evaluations. Yet this inefficiency could delay critical interventions, increase provider frustration (potentially affecting the commercial success of the device), and ultimately compromise the quality of patient care.

Ventilator systems present a troubling scenario. During usability testing, respiratory therapists might struggle to locate alarm acknowledgment buttons, taking several extra seconds to silence critical alerts. Though they eventually succeed, this use difficulty suggests poor information architecture. In an ICU setting with multiple simultaneous alarms, these extra seconds compound, potentially leading to alarm fatigue or delayed responses to genuine emergencies.

Theoretical Implications in Medical Device Design

Consider these representative scenarios that illustrate how focusing solely on use errors can prove insufficient. In the hypothetical case of insulin pen devices, users might frequently experience difficulty reading dose markings in low-light conditions. While users eventually succeed in setting correct doses (avoiding use errors), this difficulty could lead to delayed treatments and user anxiety. Only by recognizing these use difficulties could manufacturers implement solutions like improved backlighting and larger, high-contrast displays.

Imagine surgical navigation systems where surgeons successfully complete procedures despite struggling with unintuitive menu structures or confusing iconography. These theoretical close calls, in which surgeons nearly select the wrong tool or imaging mode, would reveal critical interface vulnerabilities that could lead to surgical complications under different circumstances.

Even in home-use devices, the theoretical implications are significant. Patients using continuous glucose monitors might repeatedly attempt to insert sensors because tactile feedback is inadequate, leading to wasted supplies and potential skin trauma. While they eventually achieve successful insertion, this hypothetical use difficulty suggests design improvements that could enhance user confidence and reduce complications.

The Regulatory Perspective

While FDA guidance emphasizes the importance of identifying and mitigating use errors, it is widely recognized by experts within the Human Factors community that CCs and Ds provide essential context for understanding user behaviour and system vulnerabilities.

The IEC 62366 standard acknowledges that the goal is not merely to eliminate use errors but to create interfaces that support safe and effective use under all conditions.

Minimizing the importance of CCs and Ds can lead to a false sense of security. A device that passes validation testing with minimal use errors but generates numerous close calls and difficulties is not truly a ‘pass’; it is potentially a disaster waiting to happen once deployed in real-world clinical environments, with their inherent stresses and complexities.

Building a Comprehensive Evaluation Framework

Effective Human Factors evaluations require capturing and analysing the full spectrum of user interface issues. This means implementing observation protocols that identify not just what users do wrong, but also where they hesitate, struggle, or express confusion. It requires measuring not only task success rates but also task completion times (where appropriate and applicable), error recovery patterns, and user confidence levels.

In some cases, CCs and Ds have been categorized as subsets of ‘success’, leading to them being ignored. Clearer categorization, in which CCs and Ds are classed as use-related issues (or problems, as the FDA describes them [1]) distinct from true successes, will drive thorough investigation and root cause analysis of each CC and D observed in a study session. It also ensures these events receive immediate attention even when no actual use error occurs. Design teams should view these events as valuable intelligence about potential failure modes rather than acceptable performance variations.
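To make this categorization concrete, here is a minimal sketch of how study observations might be recorded so that CCs and Ds are never rolled into ‘success’. The `Outcome` categories, the `Observation` record and the `summarize` function are hypothetical illustrations, not a format required by the FDA or IEC 62366; the point is simply that every non-success outcome is routed to root cause analysis.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    """Hypothetical outcome categories for a single task attempt."""
    SUCCESS = "success"                 # task completed with no issue observed
    CLOSE_CALL = "close call"           # user nearly erred but caught it or was stopped
    USE_DIFFICULTY = "use difficulty"   # task completed, but with struggle or inefficiency
    USE_ERROR = "use error"             # task failed or performed incorrectly


# Every category other than a true success counts as a use-related issue.
USE_RELATED_ISSUES = {Outcome.CLOSE_CALL, Outcome.USE_DIFFICULTY, Outcome.USE_ERROR}


@dataclass
class Observation:
    participant: str
    task: str
    outcome: Outcome
    note: str = ""  # moderator's description, used as input to root cause analysis


def summarize(observations):
    """Count outcomes per category and list every issue that needs root cause analysis."""
    counts = Counter(obs.outcome for obs in observations)
    needs_rca = [obs for obs in observations if obs.outcome in USE_RELATED_ISSUES]
    return counts, needs_rca


if __name__ == "__main__":
    # Hypothetical session data based on the ventilator alarm example above.
    session = [
        Observation("P01", "Silence alarm", Outcome.SUCCESS),
        Observation("P02", "Silence alarm", Outcome.USE_DIFFICULTY,
                    "Searched for the acknowledge button for several seconds"),
        Observation("P03", "Silence alarm", Outcome.CLOSE_CALL,
                    "Reached for the wrong control, then corrected"),
    ]
    counts, needs_rca = summarize(session)
    print("True successes:", counts[Outcome.SUCCESS])
    print("Issues for root cause analysis:", len(needs_rca))
```

Because CCs and Ds sit in their own categories rather than being sub-types of success, a study that looks clean on use errors alone still surfaces two issues for investigation in this example.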

Moving Forward

The medical device industry must evolve beyond the binary thinking of "error" versus "no error" in Human Factors evaluations. By embracing CCs and Ds as equally important indicators of interface quality, manufacturers can create devices that not only meet regulatory requirements but truly support the intended end user in delivering optimal care.

The stakes are too high to ignore the warning signs that CCs and Ds represent. Every CC is a potential future error, and every D is an opportunity for improvement. In an industry where interface design directly impacts patient outcomes, we cannot afford to overlook these critical insights. The path to truly safe and effective medical devices requires us to listen to what users are telling us through their struggles, not just their failures.

[1] FDA: Applying Human Factors and Usability Engineering to Medical Devices – Guidance for Industry and Food and Drug Administration Staff (2016)